Case Study: Can AI Take a Joke—Or Make One?

Exploring humor in AI-powered support systems

Research | UX Strategy | Conversational Design | NLP

Role: UX Researcher & Chatbot Designer

Team: Pavithra Ramakrishnan, Kexin Quan, Dr. Jessie Chin

Time: Aug 2023 – Present

Conference: ACM Creativity & Cognition 2025 (Poster + Publication)

✨ Overview

Can AI be funny—and emotionally supportive?

Humor is one of the most human tools for connection, yet it’s incredibly difficult for machines to get right, especially in emotionally sensitive conversations. We explored whether large language models (LLMs) such as GPT-4o (ChatGPT), LLaMA3, and Gemini can generate and recognize humor in a way that’s emotionally appropriate, context-aware, and helpful in support-focused interactions.

🔍 The Challenge

In peer coaching, therapy, and academic advising contexts, humor can help ease tension, but if misused, it risks alienating users or eroding trust.

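We asked whether LLMs can generate humor that fits a supportive conversation, and whether they can recognize the humor style and speaker role behind a supportive message.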

🎯 Project Goals

Build and evaluate 6 chatbot personas with different humor styles

Test how well LLMs generate, classify, and respond to humor in support scenarios

Publish results at a leading HCI conference (ACM C&C 2025)

My Role

Designed all 6 chatbot personas (Google Dialogflow + Slack)

Developed evaluation metrics (tone alignment, emotional fit, humor strength)

Led UX research tasks including data labeling, user testing, and prompt tuning

Co-authored the peer-reviewed ACM paper (drafted collaboratively in Overleaf)

Designed the conference poster using Microsoft PowerPoint + Adobe Illustrator

Preparing to present the work as a speaking author at ACM Creativity & Cognition 2025

Skills: UX Research · NLP · Conversational Design · Figma · Illustrator · Academic Writing · Python APIs

💡 Research Approach

Task 1: Humor Generation

We prompted GPT-4o, Gemini 1.5, and LLaMA3 to generate messages using three humor styles:

Affiliative (friendly, uplifting)

Self-Defeating (vulnerable, relatable)

No Joke (emotionally direct)

Example prompt → “window, view, weather”

Response (Self-Defeating): “I always dreamed of a beautiful window view… now I just stare at rain and regret.”
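The case study doesn't publish the exact prompt wording, so the following is only a minimal sketch, assuming a simple template that pairs the prompt keywords with one target humor style. The template text, the `STYLE_DESCRIPTIONS` wording, and `build_generation_prompt` are all hypothetical; the returned string would then be sent to each model through its own API.

```python
# Hypothetical one-line descriptions paraphrasing the three styles listed above.
STYLE_DESCRIPTIONS = {
    "Affiliative": "friendly, uplifting humor that brings people closer",
    "Self-Defeating": "vulnerable, relatable humor at the speaker's own expense",
    "No Joke": "an emotionally direct message with no humor",
}


def build_generation_prompt(keywords: list[str], style: str) -> str:
    """Assemble a hypothetical generation prompt from keywords and a humor style."""
    if style not in STYLE_DESCRIPTIONS:
        raise ValueError(f"Unknown humor style: {style!r}")
    # Same keywords for every style, so only the requested style varies.
    return (
        f"Write one short, supportive message that uses the words: "
        f"{', '.join(keywords)}. "
        f"Style: {style} ({STYLE_DESCRIPTIONS[style]})."
    )


print(build_generation_prompt(["window", "view", "weather"], "Self-Defeating"))
```

Holding the keywords fixed while changing only the style keeps the three styles directly comparable for each keyword set.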

60 human raters evaluated AI responses for:

Humor Style Accuracy

Emotional Appropriateness

Humor Strength
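Once ratings are collected (for example, exported from a Google Sheets tab), one straightforward way to compare models is to average each measure per model and humor style. This is a sketch under assumptions: the rating scale isn't stated here, so it assumes numeric scores, and the column names in `METRICS` and the function `mean_ratings` are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical column names for the three rated measures.
METRICS = ("style_accuracy", "emotional_appropriateness", "humor_strength")


def mean_ratings(rows):
    """Average each metric per (model, humor style) pair.

    `rows` is an iterable of dicts, one per rater judgment, with keys
    "model", "style", and one numeric score per metric in METRICS
    (a hypothetical schema).
    """
    # Collect every score into a list keyed by (model, style) and metric.
    grouped = defaultdict(lambda: {m: [] for m in METRICS})
    for row in rows:
        bucket = grouped[(row["model"], row["style"])]
        for m in METRICS:
            bucket[m].append(row[m])
    # Reduce each list of scores to its mean.
    return {
        key: {m: mean(scores) for m, scores in by_metric.items()}
        for key, by_metric in grouped.items()
    }
```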

Task 2: Humor Recognition

We asked the same LLMs to classify 20 human-written supportive statements that varied along two dimensions:

Humor Style (Affiliative, Self-Defeating, No Joke)

Speaker Role (Counselor vs. Friend)

This tested whether models could not only be funny but also understand the context in which humor appears.
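Because each statement carries two labels, a model's output can be scored on each dimension independently. The sketch below assumes plain accuracy per dimension; the study's actual scoring may differ, and `dimension_accuracy` and its tuple format are hypothetical.

```python
def dimension_accuracy(gold, predicted):
    """Per-dimension accuracy for paired gold/predicted labels.

    Each item is a (humor_style, speaker_role) tuple, e.g.
    ("Affiliative", "Friend"). Accuracy is computed for each dimension
    separately, so a model can score well on roles but poorly on styles.
    """
    if len(gold) != len(predicted) or not gold:
        raise ValueError("gold and predicted must be non-empty and equal length")
    n = len(gold)
    # Count matches on the first element (style) and second element (role).
    style_hits = sum(g[0] == p[0] for g, p in zip(gold, predicted))
    role_hits = sum(g[1] == p[1] for g, p in zip(gold, predicted))
    return {"style": style_hits / n, "role": role_hits / n}
```

Scoring the two dimensions separately is what makes it possible for one model to lead on humor styles while another leads on speaker roles.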

Tools & Tech

Google Dialogflow + Slack – Chatbot deployment

Figma + Illustrator – Visual design for poster

Prompt engineering + classification – Humor generation and recognition tasks

Google Sheets – Rating collection and analysis

Meet the Slack Chatbots

Designed and developed 6 Emotionally Intelligent Chatbots (EICBs) by crossing two support roles (friend and counselor) with three humor styles (no joke, affiliative, and self-defeating). Built with Google Dialogflow and deployed in Slack, the chatbots simulated emotionally supportive conversations in college-student contexts.
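The six personas form a full 2 × 3 crossing of support role and humor style. A minimal sketch of enumerating that grid follows; the label format and the `persona_grid` name are hypothetical, and how each combination maps onto a Dialogflow agent or Slack bot isn't specified here.

```python
from itertools import product

ROLES = ("Friend", "Counselor")
HUMOR_STYLES = ("No Joke", "Affiliative", "Self-Defeating")


def persona_grid():
    """Return every role x humor-style combination as a hypothetical persona label."""
    # product() varies the last iterable fastest: all styles for Friend, then Counselor.
    return [f"{role} / {style}" for role, style in product(ROLES, HUMOR_STYLES)]


for persona in persona_grid():
    print(persona)
```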

📊 Outcomes

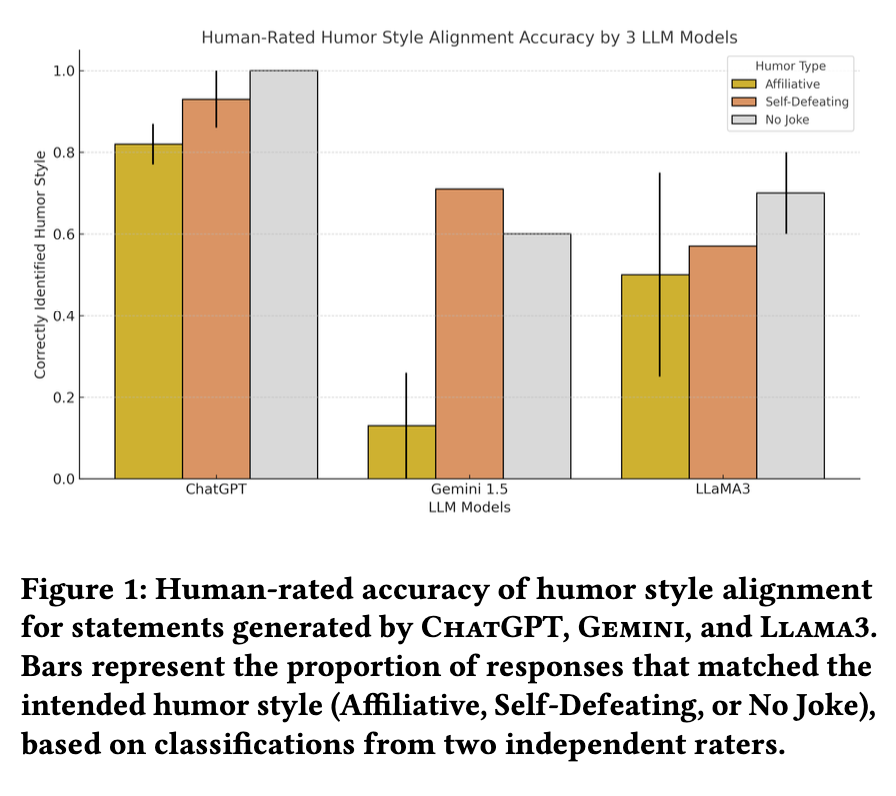

Humor Generation

GPT-4o was most accurate in tone and emotional fit

Affiliative humor was rated the most emotionally resonant, yet was the hardest to generate

LLaMA3 showed the most variability

Gemini struggled with subtle emotional humor

Humor Recognition

GPT-4o correctly classified humor styles more often than the other models

LLaMA3 did better at identifying speaker roles (Counselor vs. Friend)

All models blurred the tone of formal (counselor) and casual (friend) roles, risking responses that don't fit the speaker's role

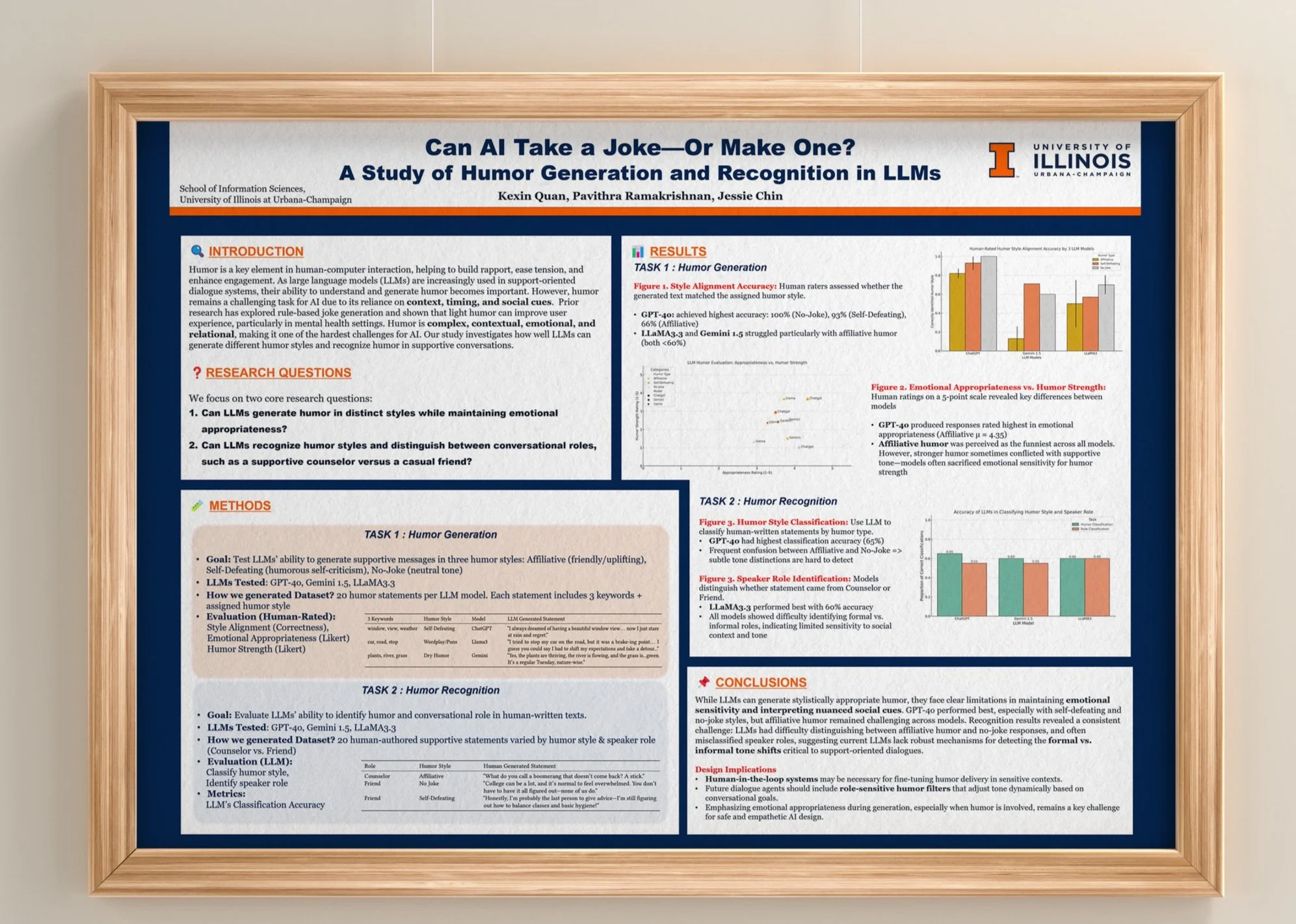

Poster Preview

Accepted to ACM C&C 2025

Designed in Adobe Illustrator, the poster visually presents all rating metrics and model comparisons.

💬 Reflections